Inclusive Futures brings together research inquiry and community collaboration to interrogate what “responsibility” in AI can mean when centred on equity and justice.

The three main pathways of the project — UnConference Dialogues, Critical AI Literacy, and Speculative Practice — explore how AI can be collectively questioned, reimagined, and refused.

Across these interconnected approaches, the project has created collaborative activities for engaging critically with technology. Together, they aim to trouble dominant narratives of innovation and reframe technology as accountable to the people it most affects. The project pathways invite us to challenge AI in its current forms, and to explore the possibilities of “re-booting” AI to build just futures.

1. UnConference Dialogues

Drawing on the networks of BLAST Fest, the UnConference brought together community organisers, third-sector organisations, artists, technologists, and researchers to question what “responsible AI” looks like when grounded in lived experience rather than corporate or institutional perspectives.

As a participatory event, the UnConference created a collaborative space for dialogue and debate. Participants shared ideas and provocations, mapped ethical challenges, and highlighted principles for developing AI differently.

From these collective discussions emerged themes that contributed to the production of the AI Manifesto for Social Justice. The Manifesto recognizes that AI can reproduce and reinforce social hierarchies and systems of exclusion and domination. It calls for decolonizing knowledge, designing for equity, and acknowledging the right to refuse harmful systems.

As a “living framework,” the Manifesto will continue to develop through ongoing dialogue across the project’s pathways and beyond—linking research, creative practice, and community engagement.

2. Critical AI Literacy

Building on the Manifesto’s principles, the Critical AI Literacy strand of Inclusive Futures aimed to transform complex and opaque debates about AI into collaborative learning. Developing AI literacy is more than gaining knowledge—it also involves critically questioning, undoing, and reimagining dominant narratives about AI. Through participatory workshops, participants explored how AI systems are built and how they shape everyday life, combining social reflection with creativity.

The Data Playbook

Led by Toju Duke (Diverse AI), The Data Playbook workshop gathered participants from diverse backgrounds and interests. Through hands-on activities and discussion, participants examined how computer vision datasets for AI are created, governed, and used — raising questions about “fairness”, consent, transparency, and accountability.

Working in small groups, participants mapped the journeys of data using real-world scenario prompts and examples. They discussed who collects data, for what purposes, and how context, bias, and power shape these processes. These activities turned abstract ideas about data ethics into tangible, meaningful issues.

The workshop highlighted the political dimensions of data, encouraging participants to consider who benefits from extractive AI systems and who bears the costs. The aim was for participants to gain a stronger sense of agency to question and influence how AI is deployed in their communities and workplaces.

Zine-Making

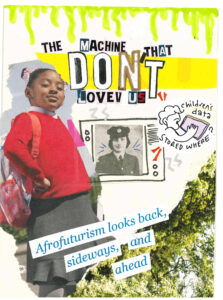

Co-facilitated by dipuk panchal and supported by BLAST Fest (Anita Shervington), this second workshop turned to zine creation as a collective way of thinking, sharing, and learning, and as a means of creating a resource for promoting critical AI literacy.

Rather than the zine merely being an output, the process of making became a space for dialogue and reflection. Participants gathered to explore how AI surfaces in their daily lives, from the technologies they use to the ways they are represented or excluded. Contributors experimented with combining their reflections with materials such as sketches, newspaper cuttings, and book covers, alongside elements from the AI Manifesto.

AI literacy was understood not merely as gaining knowledge, but also as developing the capacity to reimagine how AI shapes our lives. The zine-making process was a practice of collective critical thinking about technology, in which ideas took material and creative form.

The zine embodies the project’s commitment to non-extractive, community-centred knowledge-making — a form of grassroots publishing that gives voice to lived experience. It invites readers to encounter AI not as an abstract system but as something that can be questioned and imagined otherwise.

3. Speculative Practice: The AI Gaze

The third part of the Inclusive Futures project focuses on speculative artistic practice as a way of deconstructing how AI “sees,” classifies, and governs. Under the shared title The AI Gaze, this project pathway explores how machine vision is entangled with racialized power.

In collaboration with artist Kiki Shervington-White, two companion works — Closer to Go(o)d? and Colour Decoded — reveal the hidden processes of AI vision. Building on accounts of computer vision raised in the Data Playbook workshop, these works extend the project’s concern with ethics and justice, using speculative visual practices to expose how technologies of seeing shape who is made visible, who is obscured, and which perspectives define who we are.

Closer to Go(o)d? (Film)

Developed as part of the Inclusive Futures project and additionally supported through a BRAID Artist Commission, this film challenges the myth of AI as an all-seeing, “god-like” intelligence. Drawing on Afrofuturist thought, archival footage, glitch aesthetics, and collage from the zine, it explores how colonial systems of classification persist within contemporary algorithmic technologies.

The film invokes the West African philosophy of Sankofa — learning from the past to shape more equitable futures. By combining community-based reflections with visual experimentation, it invites audiences to look beyond AI’s supposed objectivity and to imagine possibilities for technologies grounded in care and justice.

Colour Decoded (Installation)

Colour Decoded is an interactive face-detection installation, collectively developed by Kiki Shervington-White, Luca Zheng (system programmer/designer) and Sanjay Sharma. The installation visualizes how AI systems spuriously infer race/ethnicity from facial features.

Visitors stand before a live camera feed while dynamic colour overlays and shifting data bars make visible the normally unseen facial areas that the AI gaze deems significant in determining identity.

As these patterns fluctuate, they reveal the instability and illegitimacy of algorithmic classification — demonstrating that such systems do not uncover a (non-existent) biological truth about identity, but rather reproduce racialized constructs of difference.

Colour Decoded seeks to highlight and deconstruct the colonial histories of classification embedded in machine vision and invites audiences to ask: How are you seen? Which identity categories are you forced into? Who remains excluded?